Approximation of the equilibrium payoff correspondence. More...

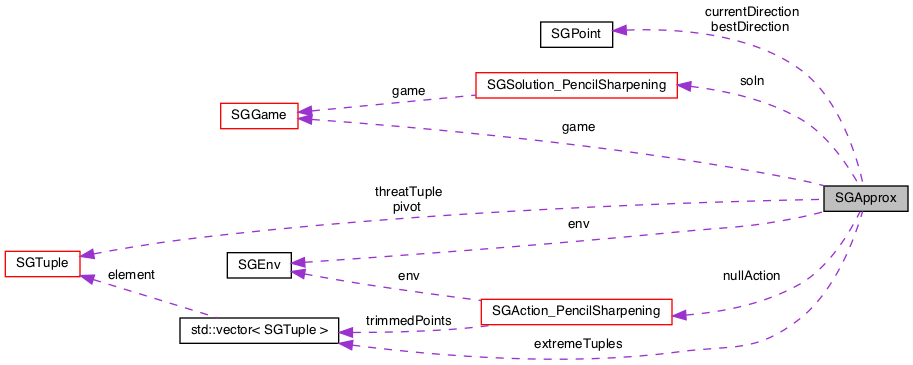

#include <sgapprox.hpp>

Public Member Functions | |

| SGApprox (const SGEnv &_env, const SGGame &_game, SGSolution_PencilSharpening &_soln) | |

| Constructor for SGApprox class. | |

| void | initialize () |

| Prepares the approximation for generation. More... | |

| int | getNumIterations () const |

| Returns the number of iterations thus far. | |

| int | getNumRevolutions () const |

| Returns the number of revolutions of the pivot thus far. | |

| int | getNumExtremeTuples () const |

| Returns the number of tuples in the extremeTuples array. | |

| SG::Regime | getBestRegime () const |

| list< SGAction_PencilSharpening >::const_iterator | getBestAction () const |

| const SGPoint & | getBestDirection () const |

| Returns the best test direction. | |

| const vector< const SGAction_PencilSharpening * > & | getActionTuple () const |

| Returns the current action tuple. | |

| const vector< SG::Regime > & | getRegimeTuple () const |

| Returns the current regime tuple. | |

| const SGPoint & | getDirection () const |

| Returns the current direction. | |

| const SGTuple & | getPivot () const |

| Returns the current pivot. | |

| const SGTuple & | getThreatTuple () const |

| Returns the current threat tuple. | |

| const vector< list< SGAction_PencilSharpening > > & | getActions () const |

| const vector< SGTuple > & | getExtremeTuples () const |

| Returns the array of extreme tuples. | |

| std::string | progressString () const |

| Returns a string indicating the algorithms progress. | |

| double | generate (bool storeIteration=true) |

| Refines the approximation. More... | |

| bool | passedNorth () const |

| Algorithm just passed north. More... | |

| void | end () |

| Destructor. More... | |

Private Member Functions | |

| void | updateMinPayoffs () |

| Calculates the minimum IC continuation values. More... | |

| void | calculateBindingContinuations () |

| Calculates binding continuation values. More... | |

| void | trimBindingContinuations () |

| Trims binding continuation values. More... | |

| void | findBestDirection () |

| Calculates the best direction. More... | |

| void | calculateNewPivot () |

| Calculates the new pivot. More... | |

| double | updatePivot (vector< double > &movements, vector< double > &changes, vector< SG::Regime > ®imeTuple, const vector< double > &maxMovement, const vector< SG::Regime > &maxMovementConstraints) |

| Updates the pivot. More... | |

| void | updateFlags () |

| Updates flags before the next iteration. More... | |

| double | distance (int newStart, int newEnd, int oldStart, int oldEnd) const |

| Calculates the distance between revolutions. More... | |

| double | distHelper (const SGPoint &p, const SGPoint &qA, const SGPoint &qB) const |

| bool | improves (const SGPoint ¤t, const SGPoint &best, const SGPoint &newDirection) const |

| Checks whether or not newDirection is shallower than best, relative to current. More... | |

| void | logAppend (ofstream &logfs, int iter, int rev, const SGTuple &tuple, int state, int action) |

| Outputs progress to the log file every iteration. | |

Private Attributes | |

| const SGEnv & | env |

| const SGGame & | game |

| SGSolution_PencilSharpening & | soln |

| const double | delta |

| const int | numPlayers |

| const int | numStates |

| std::ofstream | logfs |

| int | numIterations |

| int | numRevolutions |

| double | errorLevel |

| vector< bool > | facingEastNorth |

| bool | passNorth |

| bool | sufficiencyFlag |

| vector< bool > | updatedThreatTuple |

| vector< list< SGAction_PencilSharpening > > | actions |

| vector< SGTuple > | extremeTuples |

| SGTuple | threatTuple |

| SGTuple | pivot |

| SGPoint | currentDirection |

| vector< const SGAction_PencilSharpening * > | actionTuple |

| vector< SG::Regime > | regimeTuple |

| list< SGAction_PencilSharpening >::const_iterator | bestAction |

| SGPoint | bestDirection |

| SG::Regime | bestRegime |

| SGAction_PencilSharpening | nullAction |

| int | westPoint |

| int | newWest |

| int | oldWest |

Detailed Description

Approximation of the equilibrium payoff correspondence.

This class contains an approximation of the equilibrium payoff correspondence. At its core, it contains a sequence of extreme tuples that have been generated thus far, a pivot, and a direction. The main method, SGApprox::generate(), finds a new direction that will not intersect the equilibrium payoff correspondence and updates the pivot in that direction. By successively calling SGApprox::generate(), the approximation will be refined and asymptotically it will converge to the equilibrium payoff correspondence.

Part of the pencil sharpening algorithm.

Member Function Documentation

◆ calculateBindingContinuations()

|

private |

Calculates binding continuation values.

For each SGAction objection in SGApprox::actions, this method computes the extreme binding continuation values relative to the current threat tuple and the trajectory of the pivot on the previous revolution.

◆ calculateNewPivot()

|

private |

Calculates the new pivot.

After the best direction has been found, this method updates the pivot in the new current direction. First, it calculates the maximum movements in the current direction that would not violate incentive compatibility, and then iterates SGApprox::updatePivot until the pivot converges.

◆ distance()

|

private |

Calculates the distance between revolutions.

Returns the distance between successive iterations. Only runs when SGApprox::passNorth is true. Currently only runs when the number of SGTuple objects on successive revolutions is the same, and then takes the maximum over all distances between tuples that are in corresponding positions in the revolutions.

◆ end()

| void SGApprox::end | ( | ) |

Destructor.

Only purpose right now is to close the log file.

◆ findBestDirection()

|

private |

Calculates the best direction.

Iteraties over the SGAction objects in SGApprox::actions to find the shallowest admissible direction, and stores it in bestDirection.

◆ generate()

| double SGApprox::generate | ( | bool | storeIteration = true | ) |

Refines the approximation.

Main public routine for the SGApprox class. Updates minimum IC continuation values and binding continuation values, finds the best new direction, advances the pivot, and resets the flags. Also returns the distance between revolutions when a revolution is completed. Otherwise, returns 1.

◆ getActions()

|

inline |

Returns the array of SGAction objects that can currently be supported

◆ getBestAction()

|

inline |

Returns a constant iterator for the SGAction in which the best test direction was generated

◆ getBestRegime()

|

inline |

Returns the regime in which the best test direction was generated

◆ improves()

|

private |

Checks whether or not newDirection is shallower than best, relative to current.

Returns true if the cosine between newDirection and best is greater than SGEnv::improveTol, or if best and newDirection are colinear, whether or not best has a larger norm. Non-static so it can read the parameter values in SGApprox::env.

◆ initialize()

| void SGApprox::initialize | ( | ) |

Prepares the approximation for generation.

Opens the log file, constructs the actions array, initializes extremeTuples to a large "box" correspondence that contains the equilibrium payoff correspondence. Also initializes the pivot and the first direction. Sets the flags so that SGApprox::generate() will calculate new binding continuation values on the first pass.

◆ passedNorth()

|

inline |

Algorithm just passed north.

Indicates whether or not the current direction passed north on this iteration.

◆ trimBindingContinuations()

|

private |

Trims binding continuation values.

For each SGAction in SGApprox::actions, this method "trims" the binding payoff segents by intersecting them with the half-space correspondence that is below the pivot in the direction normal to the current direction. Not currently being used.

◆ updateFlags()

|

private |

Updates flags before the next iteration.

This method checks whether or not the threat tuple has increased and sets the flags for recalculating binding continuation values. Also checks whether or not the cardinal direction has changed, and updates SGApprox::facingEastNorth and SGApprox::passNorth.

◆ updateMinPayoffs()

|

private |

Calculates the minimum IC continuation values.

This method calculates for each SGAction object in SGApprox::actions the minimum incentive compatible continuation value, relative to the current threat tuple.

◆ updatePivot()

|

private |

Updates the pivot.

Bellman operator that advances the pivots corresponding to non-binding states in the current direction. If an IC constraint would be violated in state s, the movement in state s is set to maxMovement[s] and the state is put into the binding regime. Returns the distance the pivot moves.

Member Data Documentation

◆ actions

|

private |

actions[state] is a list of actions that can still be supported according to the current approximation.

◆ actionTuple

|

private |

actionTuple[state] is a pointer to the SGAction object that generates pivot[state].

◆ bestAction

|

private |

Pointer to the action profile that generates the shallowest direction.

◆ bestDirection

|

private |

The shallowest direction at the current iteration.

◆ bestRegime

|

private |

Indicates which incentive constraints were binding for the best direction.

◆ currentDirection

|

private |

The current direction.

◆ delta

|

private |

The discount factor, copied from SGApprox::game.

◆ env

|

private |

Constant reference to the parent environment.

◆ errorLevel

|

private |

Current error level

◆ extremeTuples

|

private |

Past trajectory of the pivot.

◆ facingEastNorth

|

private |

Indicates whether the current direction points east (if facingEastNorth[0]=true) and north (if facingEastNorth[1]=true).

◆ game

|

private |

Constant reference to the game being solved.

◆ logfs

|

private |

File stream for log file.

◆ newWest

|

private |

Index within SGApprox::extremeTuples of the westernmost tuple on the current revolution.

◆ nullAction

|

private |

Used to initialize the action tuple.

◆ numIterations

|

private |

Elapsed number of iterations.

◆ numPlayers

|

private |

The number of players, always 2.

◆ numRevolutions

|

private |

Elapsed number of revolutions.

◆ numStates

|

private |

The number of states, copied from SGApprox::game.

◆ oldWest

|

private |

Previous value of westPoint.

◆ passNorth

|

private |

Flag that is true if the algorithm switched from pointing south to pointing north on the current iteration.

◆ pivot

|

private |

Current pivot.

◆ regimeTuple

|

private |

regimeTuple[state] gives the manner in which pivot[state] was generated.

◆ soln

|

private |

Reference to the SGSolution object in which output is being stored.

◆ sufficiencyFlag

|

private |

Flag that indicates if sufficient conditions have been met while searching for the best direction.

◆ threatTuple

|

private |

Current threat tuple.

◆ updatedThreatTuple

|

private |

updatedThreatTuple[i] = true if player i's threat tuple was updated on the current iteration.

◆ westPoint

|

private |

Index within SGApprox::extremeTuples of the westernmost tuple on the previous revolution.

The documentation for this class was generated from the following files:

- src/hpp/sgapprox.hpp

- src/cpp/sgapprox.cpp